02. Two-Layer Neural Network

Multilayer Neural Networks

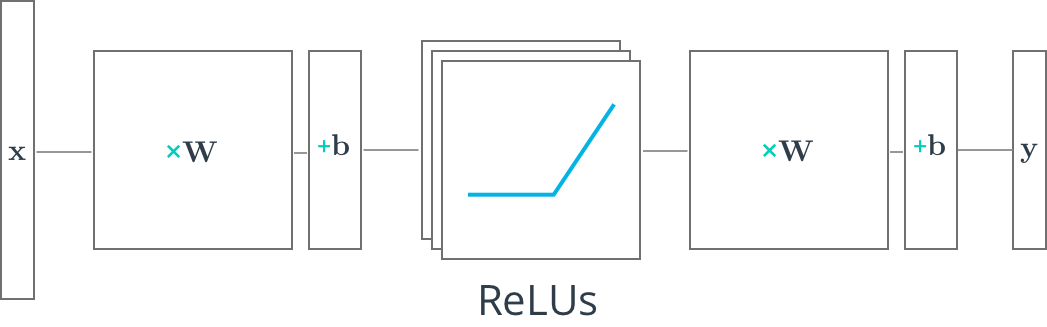

In this lesson, you'll learn how to build multilayer neural networks with TensorFlow. Adding a hidden layer to a network allows it to model more complex functions, and applying a non-linear activation function to that hidden layer is what lets it model non-linear functions. Without a non-linearity, a stack of linear layers collapses into a single linear layer.
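Why the non-linearity matters can be checked with a tiny scalar example. The sketch below uses hypothetical, arbitrarily chosen weights for a one-unit hidden layer and a one-unit output layer. Without an activation, the two layers reduce algebraically to a single linear layer; inserting a ReLU between them breaks that equivalence.

```python
import math

# Hypothetical scalar weights, chosen only for illustration.
w1, b1 = 2.0, 1.0   # hidden layer
w2, b2 = -3.0, 0.5  # output layer

def relu(z):
    # Rectified linear unit: 0 for negative inputs, z otherwise.
    return max(0.0, z)

def two_linear_layers(x):
    # Hidden layer with no activation, followed by the output layer.
    return w2 * (w1 * x + b1) + b2

def collapsed(x):
    # The same function rewritten as one linear layer: (w2*w1)*x + (w2*b1 + b2).
    return (w2 * w1) * x + (w2 * b1 + b2)

def relu_network(x):
    # Same weights, but with a ReLU applied to the hidden layer.
    return w2 * relu(w1 * x + b1) + b2

def passes_midpoint_check(f, a, b):
    # Every affine function satisfies f((a + b) / 2) == (f(a) + f(b)) / 2,
    # so failing this check proves f is not affine (linear plus a bias).
    return math.isclose(f((a + b) / 2), (f(a) + f(b)) / 2)

print(passes_midpoint_check(two_linear_layers, -2.0, 2.0))  # True
print(passes_midpoint_check(relu_network, -2.0, 2.0))       # False
```

Only the ReLU version fails the check, so only it can represent something a single linear layer cannot.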

We'll use ReLU (rectified linear unit), a non-linear activation function. ReLU outputs 0 for negative inputs and x for all inputs x > 0; equivalently, f(x) = max(0, x).
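As a minimal, framework-free sketch of that definition, ReLU is a one-liner; applying it element-wise to a list mimics how an activation is applied to every unit of a layer. The helper names `relu` and `relu_vector` are illustrative, not from any library.

```python
def relu(x: float) -> float:
    """Rectified linear unit: returns 0 for x <= 0 and x for x > 0."""
    return max(0.0, x)

def relu_vector(xs: list[float]) -> list[float]:
    """Apply ReLU element-wise, as an activation does across a layer's outputs."""
    return [relu(x) for x in xs]

print(relu_vector([-2.0, -0.5, 0.0, 0.5, 3.0]))  # [0.0, 0.0, 0.0, 0.5, 3.0]
```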

Next, you'll see how a ReLU hidden layer is implemented in TensorFlow.
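Before the TensorFlow version, it may help to see the forward pass of a two-layer network with a ReLU hidden layer written out in plain Python. This is a sketch under stated assumptions: the layer sizes (2 inputs, 3 hidden units, 1 output), the weights, and the helper names `dense` and `forward` are all hypothetical. The hidden layer computes inputs × weights + biases and then applies ReLU, which is roughly what TensorFlow's `tf.matmul`, `tf.add` and `tf.nn.relu` would do on tensors.

```python
def relu(z):
    # Rectified linear unit: 0 for negative inputs, z otherwise.
    return max(0.0, z)

def dense(inputs, weights, biases):
    # Fully connected layer: weights[i][j] connects input i to unit j,
    # so this computes (inputs x weights) + biases for a single example.
    return [
        sum(x * weights[i][j] for i, x in enumerate(inputs)) + biases[j]
        for j in range(len(biases))
    ]

def forward(features, hidden_w, hidden_b, out_w, out_b):
    # Hidden layer: linear step followed by ReLU.
    hidden = [relu(h) for h in dense(features, hidden_w, hidden_b)]
    # Output layer: linear step only.
    return dense(hidden, out_w, out_b)

# Hypothetical parameters: 2 inputs -> 3 hidden units -> 1 output.
features = [1.0, -2.0]
hidden_w = [[1.0, -1.0, 0.5],
            [0.5, 1.0, -1.0]]
hidden_b = [0.0, 1.0, 0.0]
out_w = [[1.0], [2.0], [-1.0]]
out_b = [0.5]

print(forward(features, hidden_w, hidden_b, out_w, out_b))  # [-2.0]
```

Here the hidden pre-activations are [0.0, -2.0, 2.5]; ReLU zeroes the negative one, giving [0.0, 0.0, 2.5], and the output layer then produces 2.5 × (-1.0) + 0.5 = -2.0.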